Paper Released: MusiXQA

MusiXQA: Advancing Visual Music Understanding in Multimodal Large Language Models

I’m excited to share our new paper, MusiXQA: Advancing Visual Music Understanding in Multimodal Large Language Models!

This project was motivated by my passion for music and my interest in how AI might understand symbolic music as humans do. I’m glad I turned that idea into action and had the chance to collaborate with friends along the way. What started as a side exploration grew into a collaborative effort that I’m truly proud of.

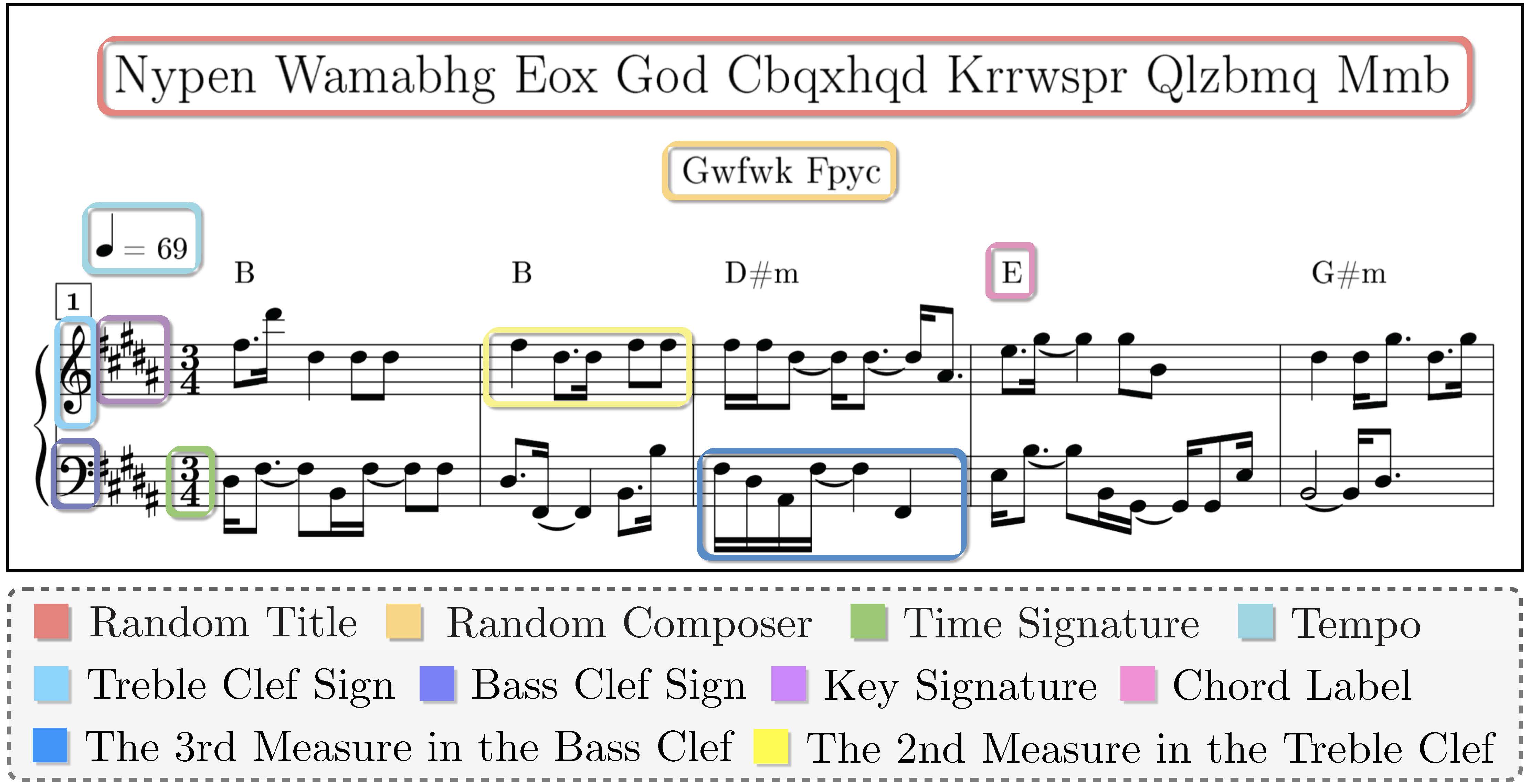

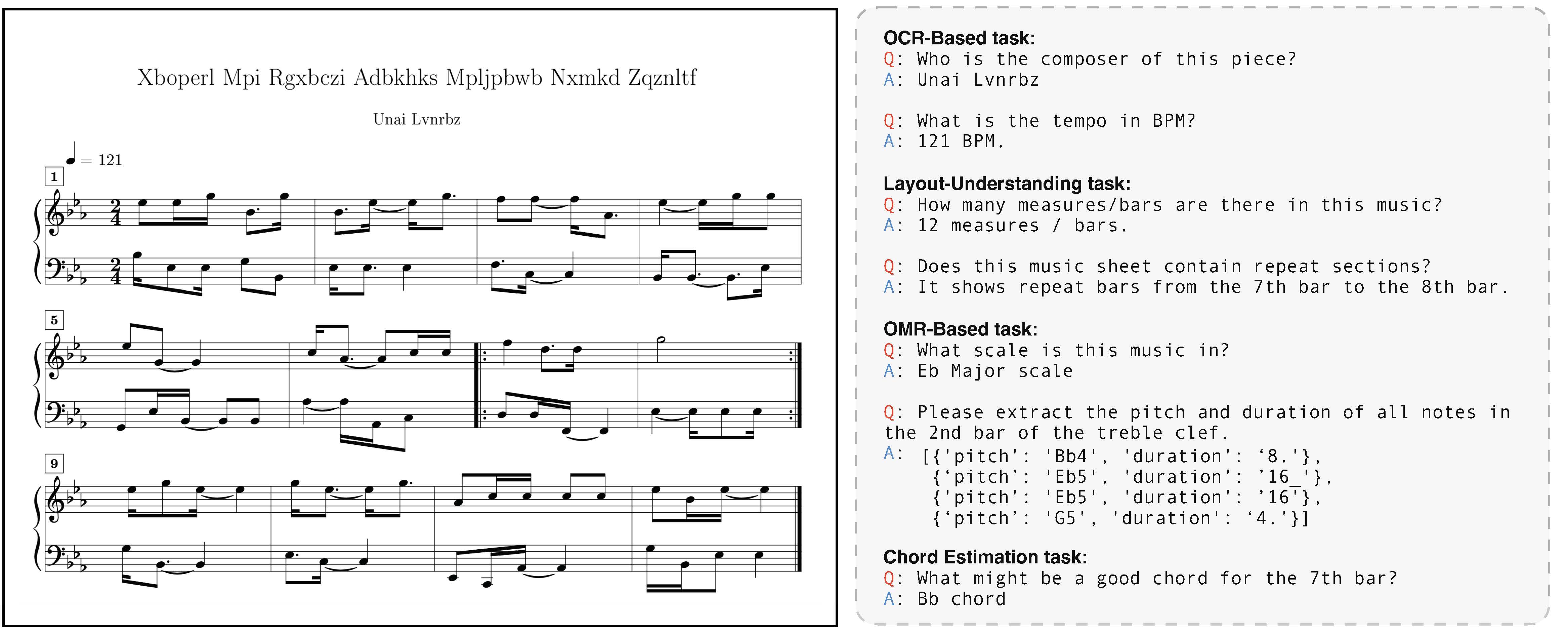

In this work, we introduce MusiXQA, the first large-scale benchmark for evaluating and improving MLLMs on music sheet understanding.

Alongside it, we release Phi-3-MusiX, a model fine-tuned on this benchmark, where we observed significant performance gains over GPT-4o on symbolic music QA tasks.

That said, raw model performance isn’t the heart of this project. What excites me more is the opportunity to explore a rarely studied task—using hand-designed data based on my own musical knowledge—and to watch a model gradually learn to read and interpret music sheets. It felt like replicating part of my own ability in an AI model. This experience deepened my belief that, with better data and serious computational effort, future large models could truly approach the level of musicianship I envision. I’m very happy to have contributed to this underexplored direction.